Machine Learning Services

Machine Learning Services in SQL Server is designed to support data science tasks without exposing your data to security risks or moving data unnecessarily.

Key benefits include:

- Avoids data movement and data risk by bringing analytics to the data

- Integrates the best features of open source with Microsoft's enterprise capabilities (revoscale and microsoftml libraries).

- Easy to deploy and consume predictive models

- Integration with existing workflows

- Unified governance across analytics and storage

In-database Analytics

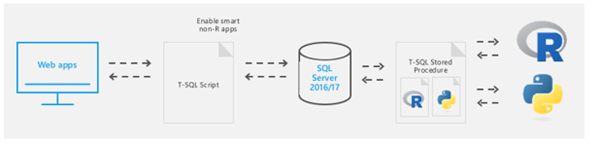

There are two platforms introduced by Microsoft to integrate with open source R/Python languages with business applications:

- SQL Server Machine Learning Services (In-Database) supports both R and Python for in-database analytics

- Microsoft Machine Learning Server supports R and Python deployments on Windows, Linux, HDInsight Spark and Hadoop clusters.

This paper talks about deploying models using SQL server machine learning services. One key benefit of Machine Learning services is bringing the compute to the data by allowing R/Python to run on the same computer as the database. With this new functionality you can train models, generate plots, perform scoring, and securely move data between SQL Server and R or Python.

In-Database R/Python Integration

With the full support of in-database Python/R in SQL Server, the SQL Server developers now have access to the extensive Python/R ML and AI libraries from the open source ecosystem along with the latest packages from Microsoft (revoscalepy and microsoftml libraries) for developing intelligent applications with in-database analytics.

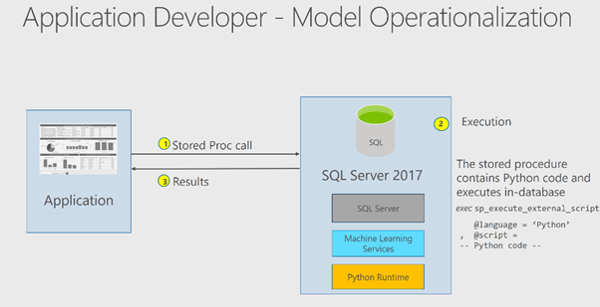

Operationalization with T-SQL

sp_execute_external_script in SQL Server enables operationalization of R/Python models by calling the scripts within simple stored procedures. Any application can use the trained R/Python models by a simple stored procedure call that connects to the SQL Server.

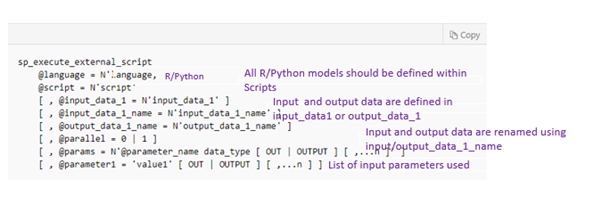

sp_execute_external_script

sp_execute_external_script enables to run R/Python script within stored procedures inside the SQL server.

To execute sp_execute_external_script, you must first enable external scripts by using the statement, sp_configure 'external scripts enabled', 1;

Creating a Predictive Model

The following steps describe how to train a model using Python, and then save the model to a table in SQL Server.

Case Study: Predicting Customer Churn

We took a common challenge across many industries which is the inability to predict which customers are about to churn. With such information, companies can prevent customer churn by proactively running promotions or other interventions.

About the Data

The data that was used for this tutorial includes finance data containing customer account level details and the customer demographics. The customers were segmented into three levels (Dormant, Running and Closed).

Step 1: Visualize and Explore the Data

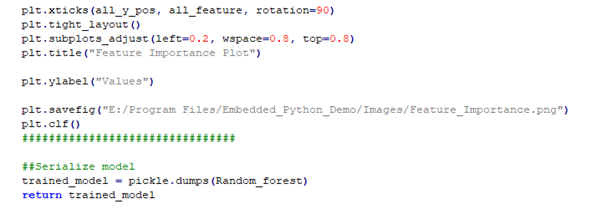

In this step, you can plot the data, explore it and begin to draw some conclusions. The images are saved in the system where the SQL SERVER will be installed. In our example, the image is saved inside the E drive.

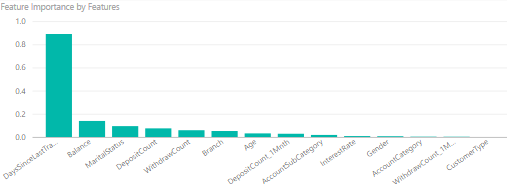

FEATURE IMPORTANCE GRAPH

The plot below helps us to identify the most important variables that are required to build the model with high accuracy. While building a model we should ensure that the top features are present in the input data.

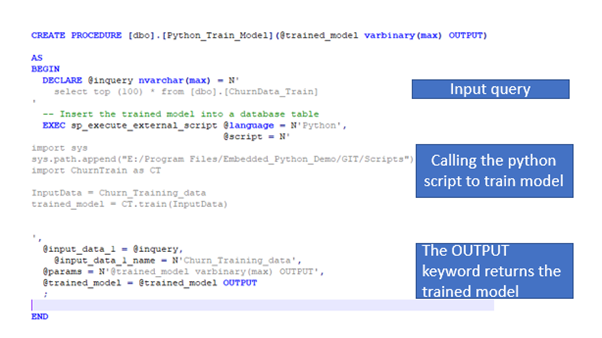

Step 2: Train the Model

The target table contains 3 customer segments that we need to predict. Hence this is a classification problem where we need to classify customers based on the customer account details along with their demographics.

To build the model, you define the formula inside your R code, and pass the data as an input parameter. The stored procedure contains a definition of the input data, so you don't need to provide an input query.

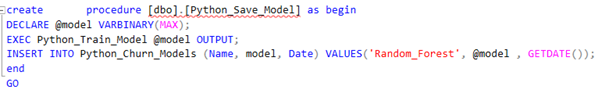

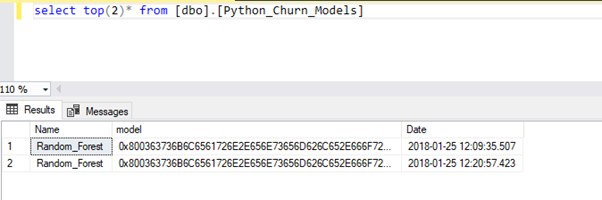

Step 3: Save the Model

Run the following SQL statements to insert the trained model into the table. We can also add some evaluation metrics in the table.

Processing of the data and fitting the model may take a few minutes. Messages that would be piped to Python's stdout stream are displayed in the Messages window of Management Studio. The errors within the python scripts are also shown in the message tab.

When you print the model using the select query, you can see that a new row has been added, which contains the serialized model in the column model.

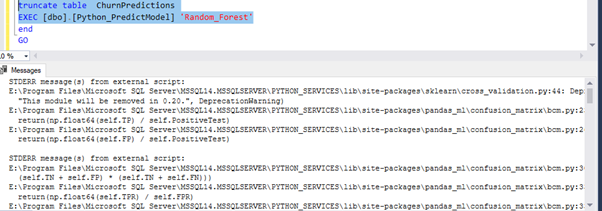

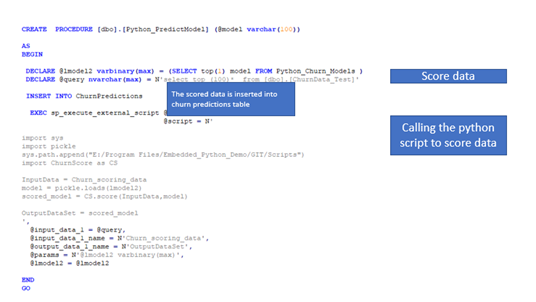

Step 4: Operationalize the Model

In this step, you will learn how to operationalize the models that you trained and saved in the previous steps.

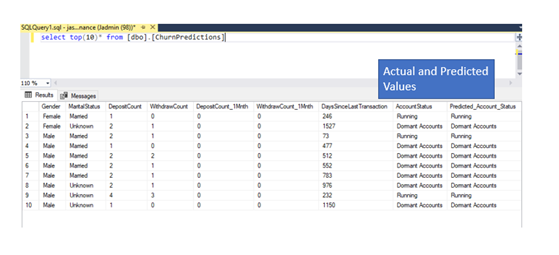

Pick the best model that was saved in the table and score it against the original data which can be either batch or real-time scoring.

On successful execution of this stored procedure, the scoring data is loaded into the Churn Prediction table and this data can be used for targeting specific sets of customers for promotions.

Some Scenarios using SQL Server

Below we illustrate different sets of scenarios available in deploying the model depending on computing environment, data availability, etc.

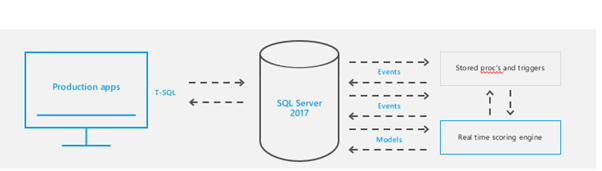

SCENARIO1: Score Big/Fast data in Real Time

This scenario is used when we want to train our models in high computing environments like Hadoop/HDInsight and the predicted results are directly fed into the production apps through T-sql that calls the model (pickle file). It is useful for scoring data with minimum latency since the scoring is happening at the edge node.

SCENARIO2: Build and Train models within SQL Server

This scenario is generally used when we want to make use of SQL Server to the fullest. Here, we train and score the models using the T-sql stored procedures. Since the models are captured in the tables periodically, we can effectively handle model management.

Conclusion

In this tutorial, you have learned how to work with Python code embedded in stored procedures. The integration with Transact-SQL makes it much easier to deploy Python models for prediction and to incorporate model retraining as part of an enterprise data workflow. Also, we have seen sample scenarios that can be used in real time scenarios.