Since the release of Azure Data Factory V2, I have played around with it a bit, but have been looking for an opportunity for a real world use case where V2 would be better suited than V1. Working with a local bank on a Proof of Concept has provided this opportunity. As part of the PoC, we are loading XML files into SQL Data Warehouse.

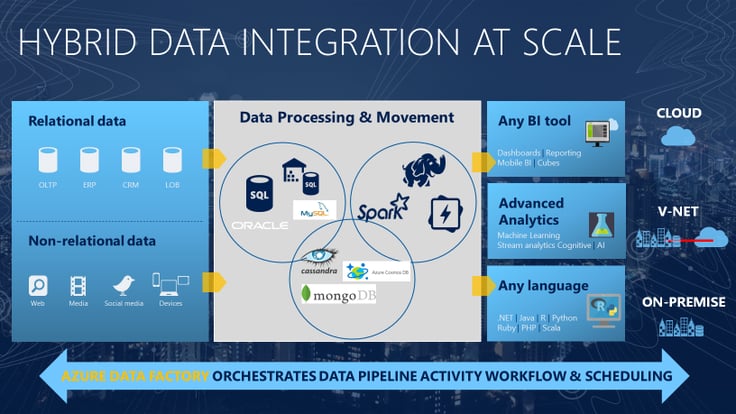

XML files are widely used, and they contain multiple entities of related data. Extracting data from XML files using Azure Data Factory V1 is still a lot of work with custom code. SQL Server Integration Services (SSIS) Packages are on the other hand, make this process much easier, and are a tried and tested solution. It is quite easy to extract from XML files and load them in to multiple staging tables with the relationships. With XML data sources being common in cloud data sets, Azure Data Factory V2 works very well for this use case.

The purpose of this exercise is to experiment on using SSIS in Azure to extract xml files data from a Azure storage container to Azure SQL Server tables.

Following are the main steps in this approach.

- XML Files are hosted in Azure storage containers as BLOB files.

- SSIS package would read the above XML files from the container using a For Each Loop Container, and extract to multiple staging tables in Azure SQL database.

- This SSIS package would be deployed in SSIS Catalog in the installed and configured Azure SQL Server.

Prerequisites: Azure subscription with sufficient privileges, SSIS 2016 or a higher version installed in a development machine with the compatible Azure Service Pack, and a SQL Server database in Azure.

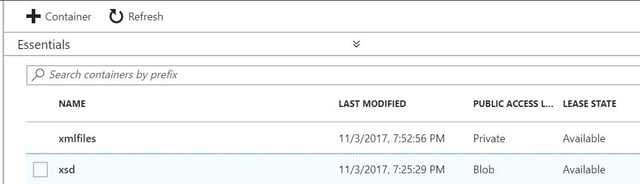

Firstly, let’s create an Azure Storage Account using Azure Portal. (Please refer to https://docs.microsoft.com/en-us/azure/storage/common/storage-create-storage-account for guidance on creating storage containers.) Create 2 storage containers named “xmlfiles” (with the access policy -> public access level as private) and “xsd” (with the access policy -> public access level as Blob) in the above created storage account.

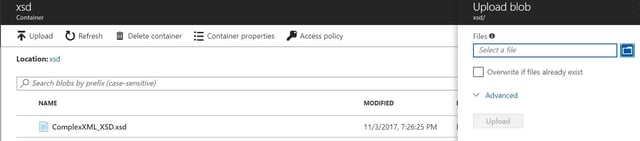

Generate the XSD file for the XML files (all xml files in this folder should have the same format.), and copy it to the “xsd” storage container created in the above step.

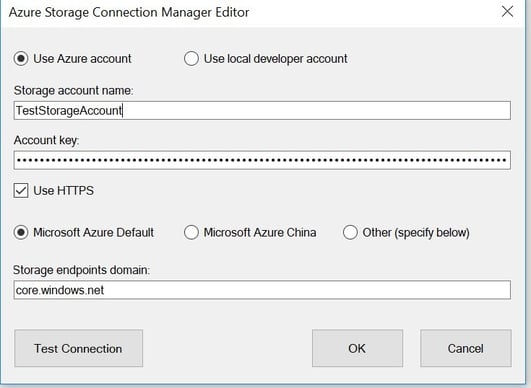

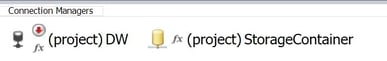

Now, let’s create a SSIS solution using SQL Server Data Tools (SSDT). This will create a SSIS solution with a new SSIS package named “Package1.dtsx”. Rename the package to “LoadXMLData.dtsx”. Create a new OLEDB connection named “DW” by right clicking on the connection manager to connect to the Azure SQL Server DB created as explained before. Use SQL Server authentication with save password option. Right click on the connection manager again, and create a new connection. Choose “Azure Storage”, and click Add to create a connection to the Azure storage container created in the above steps.

Please enter the exact Azure storage account name created under the “Storage account name” field. Copy the Account Key. (Account key of the storage account is available under “Access Keys” section of the storage account in Azure portal. Use either key1 or key2.)

Once the connection is established, right click on each connection, and convert to Project Connections.

Right click them and parameterize the connection strings in project level parameters.

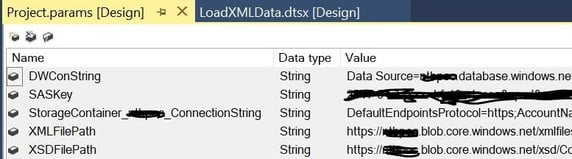

Now, add the below project parameters as well for this exercise.

DWConString is the parameter for the connection string of the SQL Server database in Azure. StorageContainer….. is the connection string of the storage account. XMLFilePath is the blob file path of the XML files. XSDFilePath is the blob path to XSD file that has the schema definition of the xml files.

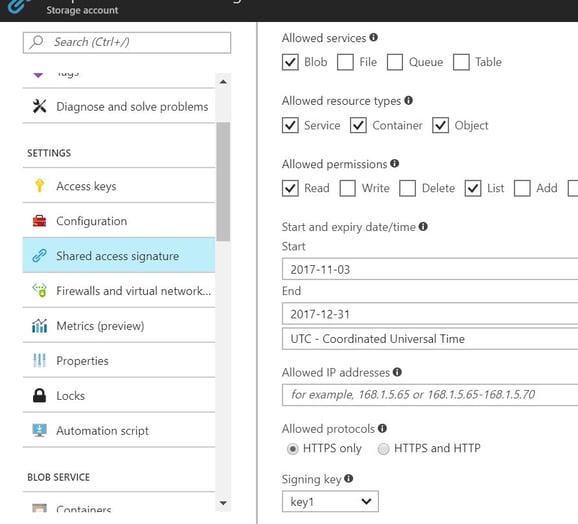

“xsd” blob container is accessible to everyone anonymously while “xmlfiles” storage container is accessible only to the owner who can view the account key from Azure Portal. Thus, to enable access to the “xmlfiles” storage container, we should generate a SAS token from Azure portal which will be stored in the above SASKey parameter in the SSIS package.

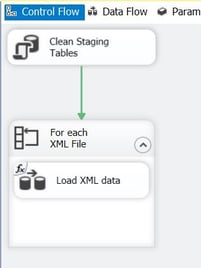

Control flow of the SSIS package should have following tasks.

“Clean Staging Tables” is an Execute SQL Task which will truncate the staging tables created in Azure SQL Server. “For Each XML File” is a for each loop container which will iterate through all xml files in the above created “xmlfiles” storage container.

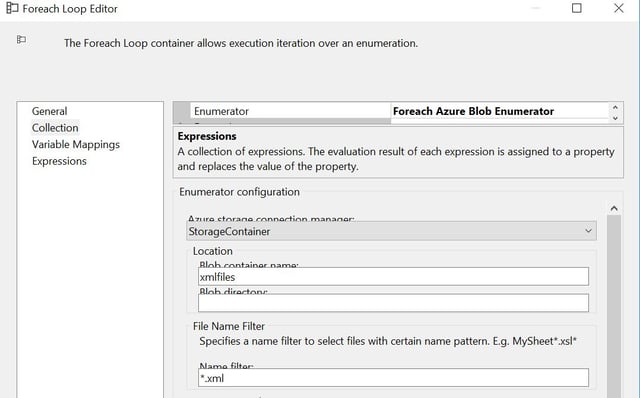

For each loop container should be configured as below by double clicking on it. Click Collection and select “Foreach Azure Blob Enumerator”.

Select the storage account connection and enter the storage container name as the above. We can use wildcard characters for file name filters.

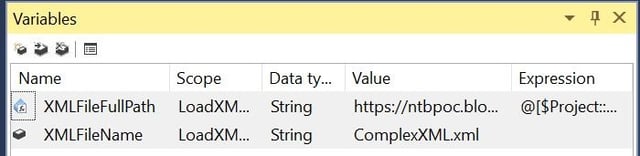

Now, let’s add the following 2 variables in the SSIS package.

XMLFileName variable will be used as the looping variable to retrieve the XML file names from the “For each Loop Container”. (It would retrieve only the file name from the blob location.)

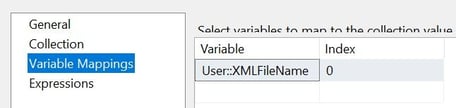

The variable should be mapped with the foreach loop container as below.

Now, to generate the full path of the xml files along with the SAS token (this is required to access the “xmlfiles” storage container since it has restricted access.) following expression is used for the “XMLFilePath” variable.

@[$Project::XMLFilePath] + @[User::XMLFileName] + "?sv=" + @[$Project::SASKey]

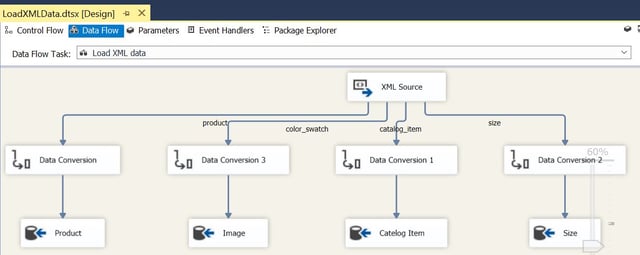

“Load Xml Data” data flow task would be configured as follows.

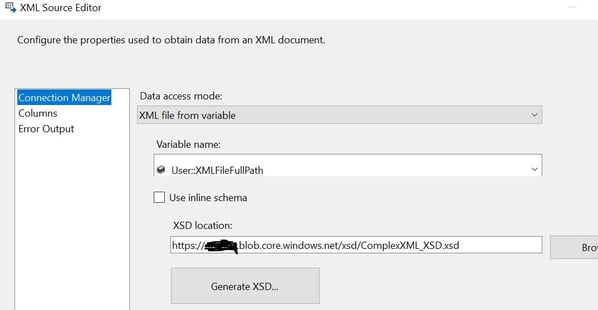

XML file used in this exercise contain 4 entities as the above, and they would be loaded to 4 separate tables in Azure SQL Server. Depending on the schema of the XML files number of entities would be different. SSIS generates foreign keys to link the data in the tables in the destination. (Data conversion steps are used to convert any incompatible data from XML to Azure SQL Server.) XML Source is configured to access XML from variable as follows.

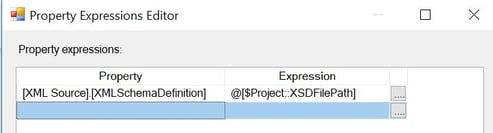

The location of the XSD could be parameterized through properties window of the data flow task using expressions as below.

Now, you can right click on the saved SSIS package from Visual studio and test for any issues. Azure SQL Server tables should be loaded with data from the xml files if successful.

Install and configure Azure-SSIS Runtime. Please refer to the blog “Deploying SQL Server Integration Packages in Azure” for details on deploying and configuring SSIS in Azure. Deploy the SSIS package in Azure SSIS Catalog using project based deployment. Right click on the deployed SSIS package to run and test.

Extraction of XML file data is a common requirement in ETL even for cloud based solutions. Such extractions from XML files is quite easier in SSIS than Azure data factory as of now. Many developers are familiar with SSIS. Azure Data Factory V2 now enables deploying SSIS packages in Azure. Thus, we can run the SSIS packages in Azure extracting XML files from Azure blob storage containers, and loading them to Azure SQL server tables as explained in this blog.