Some of the biggest hurdles in speech to text transcription are the speaking styles and accents, vocabulary and noise. In order to overcome these challenges, we make use of the Microsoft Custom Speech Service. It enables the creation of customized language and acoustic models tailored to the application and the speakers to make the process more efficient and accurate.

In this article we talk about the use of custom acoustic models in the Microsoft Custom Speech Service to better transcribe speech into more meaningful text.

Once again we are making use of a recorded conversation between President Trump and Omarosa, this time to understand the concept of acoustic models. To listen to the audio file, please click on the link below.

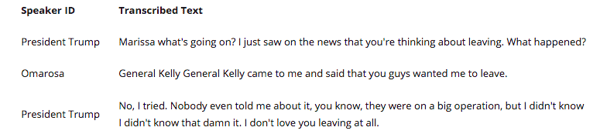

Using the solutions described in Part 1 of this walkthrough series, the model ended up with the following transcription of the call snippet:

Using the solutions described in Part 1 of this walkthrough series, the model ended up with the following transcription of the call snippet:

Figure 1: Call Snippet Transcription

Notice that ‘Omarosa’ is transcribed incorrectly as ‘Marrisa’. The initial transcription models could not understand the acoustics of the word accurately enough to make that transcription correctly.

This is where custom acoustic models come into the picture. We start by taking a corpus of audio clips which have the spoken form of ‘Omarosa’ and its equivalent transcription and use that to train a custom model. The custom model then learns from this data.

We use the following piece of code to access the trained model and get the result. It calls the Microsoft Custom Speech Service and passes the audio clip to it to receive a response.

# Defining the custom speech service

def custom_speech(callSnippet):

request = urllib.request.Request("https://westus.stt.speech.microsoft.com/speech/recognition/dictation/cognitiveservices/v1?", callSnippet)

response = urllib.request.urlopen(request)

custom_audio_resp = json.loads(response2.read().decode('utf-8'))

# Callinng the custom speech service

custom_speech(callSnippet)

Multiple iterations of training and testing are required to train the model to get to the desired accurate output. Below, we show the results of two iterations: an intermediate one with slightly better results than the original transcription and a second one which was able to transcribe the audio accurately.

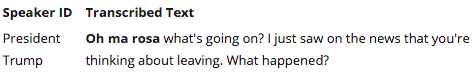

Iteration 1: Intermediate Result

Figure 2: Intermediate results

It can be seen from the result above (Figure 2), ‘Marrisa’ is now transcribed as ‘oh ma rosa’ which is much closer to ‘Omarosa’ than it was earlier, which means the model is getting better!

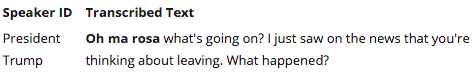

Iteration 2: Final Result

Figure 3: Final results

With sufficient training, the custom model improves, and the model combined with some custom fuzzy rules gives an improved result which can be seen in Figure 3.

Conclusion

Custom acoustic models are effective methods to provide more accurate and therefore more meaningful transcriptions. It stems from training a sufficient volume of data to use that trained model for transcription. This ultimately helps make the downstream processes, such as text analytics, more accurate.