The blog post How Just Listen Works describes the basic working model of the Just Listen voice analytics solution. This time we dive into the details behind the speech-to-text aspect of voice analytics with the help of an interesting example: the Omarosa tapes.

First, let’s define the 3 basic processes that form the building blocks of speech-to-text technology:

- Speaker recognition or diarisation which is the process of identifying the individual speakers in an audio segment

- Transcription which is the systematic representation of language in a written form

- Speaker identification which is about attributing the speech segments to specific personalities

In selecting technologies for Just Listen, we took into consideration the following:

- Ease of use: The availability of programming language specific libraries or REST APIs with clear documentation for ease of implementation

- Multi-language Capability: We needed multi-lingual capabilities for Just Listen so the speech-to-text service had to support a range of languages other than English

- We also looked for a robust service that could provide good results even when exposed to complexity in the audio file (noise, multiple speakers talking at the same time, quality issues, etc) and accuracy of the transcript generated. Some of the existing solutions in the market handle this by providing multiple voice models targeting different audio profiles.

Let’s try out the speech-to-text capability of Just Listen using the audio clip below, a conversation between US President Donald Trump and Omarosa.

Speaker Recognition

For speaker recognition, we use an open source library pyAudioAnalysis to identify the two speakers in the clip, President Trump and Omarosa. This library uses feature extraction and clustering techniques to group short segments of the audio. The advantages of this library are that it is language independent and fast. It is also easy to implement in our program.

from pyAudioAnalysis import audioSegmentation as aS

results = aS.speakerDiarization( audio, n_speakers = 2, plot_res=False)

The results from the function will give us three lists: speaker IDs, start and end times. Each corresponding element in the lists represent a continuous segment of audio in the original clip. For our audio, 3 segments were found:

speaker_ids = [1, 0, 1]

start_times = [0.0, 4.7, 9.6]

end_times = [4.7, 9.6, 21.8]

Transcription

Once the different segments have been identified, the audio clip can be broken up into chunks. Each chunk is then transcribed, and the resulting text can be sent for text analytics using various Natural Language Processing (NLP) methods.

In this example, Google Speech-to-Text service is used to perform the transcription. To make use of the service, you sign up for the service where authentication keys will be provided. These keys are to be used together with Python libraries. In the code block below, we show here a simplified example on how the Google library is used.

from google.cloud import speech

from google.cloud.speech import types

# Authentication keys are defined before this

client = speech.SpeechClient(credentials=scoped_credentials)

# Loads the audio into memory

with io.open(file_name, 'rb') as audio_file:

content = audio_file.read()

audio = types.RecognitionAudio(content=content)

# Define configuration for the transcription

config = types.RecognitionConfig(

encoding = enums.RecognitionConfig.AudioEncoding.LINEAR16,

sample_rate_hertz = 8000,

language_code = 'en-US',

enable_automatic_punctuation = True,

model = 'phone_call' )

# Sends audio for transcription. Results are in the response variable

operation= client.long_running_recognize(config, audio)

response= operation.result(timeout=500)

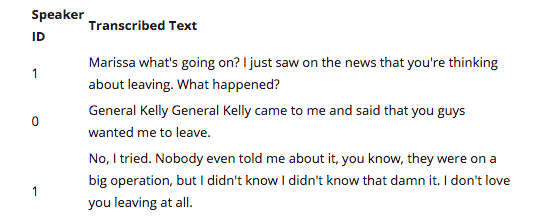

Speaker recognition and transcription for our audio clip gives us the following results:

The speaker recognition library was able to identify which parts of the conversation were spoken by President Trump and Omarosa. This is not surprising given that to human ears, the two voices are distinctive in their aural properties.

Subjectively, the transcription here is quite accurate with only a few words missing or transcribed erroneously (i.e. Marissa instead of Omarosa). This is probably due in large part to the good audio quality with no significant noise distorting the speakers’ words.

Speaker Identification

The next logical feature is speaker identification. In our example, we would like to automatically identify the speakers of the audio clip to be either President Trump and Omarosa. In a Call Center scenario, we would want to identify which parts of the conversation are spoken by the agent and the customer. This will allow us to do a more accurate analysis of the content of the call. For example, we can audit the compliance requirements only on the agents’ side and perform a more in-depth study on the customers’ response.

Identification is very easily done by a human but not so much by an automated system. General speech models are built to recognize all types of voice profiles. To identify a specific person in a speech requires building a custom voice model. This is usually done by providing audio samples of the target person together with the text equivalent. You can read about this aspect of the solution in this blog post.

Notes on Performance

- No one service provides the best overall results. For example, we may want to use the speaker recognition from IBM while using the transcription results from Google. Or we can use Microsoft for audio in English and a different combination for another language.

- Results are highly dependent on audio quality. This may require some audio pre-processing like background noise attenuation to optimise the results. It may be difficult to define a fixed flow if there is a wide range of input audio quality.

- Processing time may increase based on the services used. This includes latency in function calls to online services and processing overheads. This is not much of an issue in a batch processing scenario but is of concern in real-time application.