In Part 1 of this Technology Walkthrough, we focused on speech-to-text processing and showed how we can segregate a given audio clip into its constituent speakers represented by alias tags and start and end times.

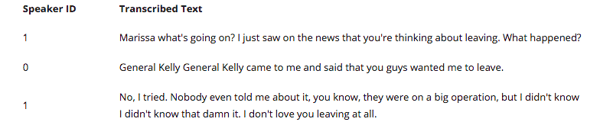

Results are shown in Figure 1 below.

Figure 1: Before Speaker Identification

We are using the conversation between Omarosa and President Trump call to illustrate this example. You can listen to the audio clip by clicking on the link below:

Speaker Identification

As mentioned in the earlier blog, it is necessary to identify from the audio, who the actual speakers are, to give more context to the audio and to gather more insights about the speakers.

To do this, we train a custom model or service with audio samples belonging to the respective speakers we want to identify in the audio clips. The code snippet below shows how the file is trained using the speaker identification service from Microsoft.

# Importing required libraries

import IdentificationServiceHttpClientHelper

import sys

# Defining profile enrollment function

def enroll_profile(subscription_key, profile_id, file_path, force_short_audio):

helper = IdentificationServiceHttpClientHelper.IdentificationServiceHttpClientHelper(

subscription_key)

# Sending the audio clip and the profile identifier to the service for enrollment

enrollment_response = helper.enroll_profile(

profile_id,

file_path,

force_short_audio.lower() == "true")

# Getting the response of the enrollment status

print('Enrollment Status = {0}'.format(enrollment_response.get_enrollment_status()))

if __name__ == "__main__":

# Calling the enroll function

enroll_profile(sys.argv[1], sys.argv[2], sys.argv[3], sys.argv[4])

These audio clips, once trained, become the voiceprints of the speakers in the model, which are used to identify speakers on new audio clips. The speaker identification models try to identify different speakers based on characteristics of the voice, or voice biometrics. It is essentially a 1:N match wherein the model tries to identify an unknown speaker from a huge number of audio templates. The technologies used to create the model consist of a mix of neural networks, Gaussian Mixture Models (GMMs), pattern matching algorithms and other voice models.

For our solution, we trained a model with voices from both Omarosa and President Trump and ran it on this audio file to try and identify the two speakers. The code snippet below is used to access that model and identify the speakers.

# Import required libraries

import IdentificationServiceHttpClientHelper

# Defining the identification function

def identify_file(subscription_key, file_path, force_short_audio, profile_ids):

helper = IdentificationServiceHttpClientHelper.IdentificationServiceHttpClientHelper(

subscription_key)

# Calling the service to identify the speaker on the audio clip

identification_response = helper.identify_file(

file_path, profile_ids,

force_short_audio.lower() == "true")

print('Identified Speaker = {0}'.format(identification_response.get_identified_profile_id()))

print('Confidence = {0}'.format(identification_response.get_confidence()))

if __name__ == "__main__"

identify_file(sys.argv[1], sys.argv[2], sys.argv[3], sys.argv[4:])

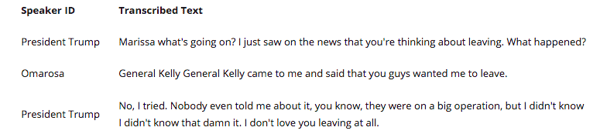

The results from the model and code snippet can be seen below.

Figure 2: After Speaker Identification

We can see that the model is clearly able to understand, differentiate and identify both the speakers.

Next Steps

Once you know who the speakers are, as opposed to just ‘0’ and ‘1’, we can add value to the audio, text and all further downstream operations. We can begin to analyze and derive useful insights from the transcription in the form of entities, sentiments, tones and other such features.

You can read about how these analyses can be made in Part 3 of this technology walkthrough.