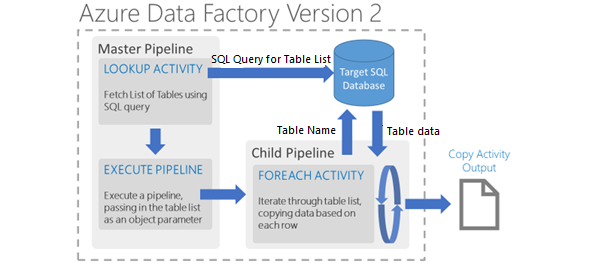

For a recent project, we had to copy data from ERP systems in 3 countries to Azure SQL. With each instance of the ERP application having more than 70 tables, using the traditional method for defining data sets and copying data would be too tedious. In ADF v2, using certain parameters, we were able to make this entire process dynamic and all it took was 1 day for all this to work.

In ADF v1, for each table we have only one data set. This means that for every data set there will be a corresponding table. Multiplying this by the number of instances of the ERP system as well as with multiple versions of the source tables, the amount of components that we have to deal with is extremely large.

With ADF v2, we do not need to do that as we can use parameters instead. We can set the table name along with the column set as parameters. The benefit of doing this is that we need only one data set for each database source. This makes the process entirely metadata driven. With a single pipeline and some metadata, we can handle data from multiple countries, multiple versions of the source and from multiple source tables.

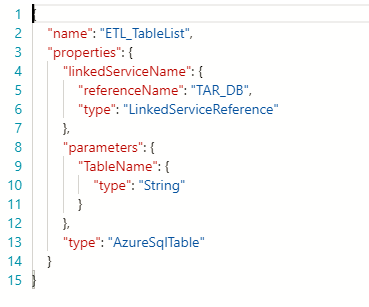

Firstly, we need to configure a dummy table for the data set. JSON code will look like this:

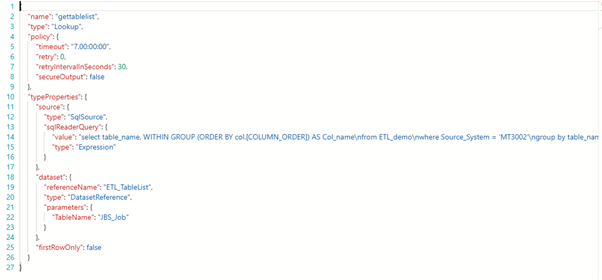

We create a new pipeline with the first activity being a Lookup. Then we use SQL statements to get the table name and the column name.

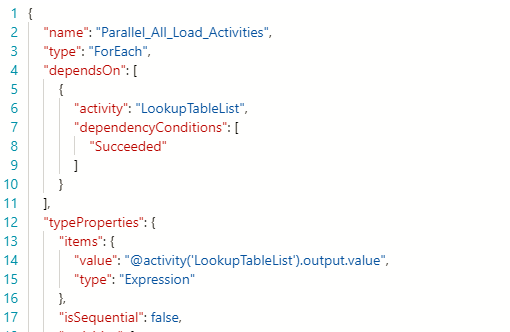

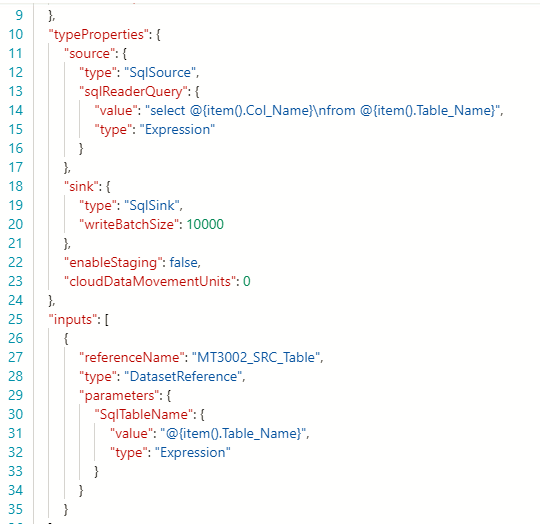

Please note that we need to return all col_name with only one row as string. Then we use Foreach activity, to loop each row of output to generate the sourcing SQL statement. Inside typeProperties, we set isSequential as false and then set the loop as Async. This loads data in parallel for all tables.

Finally, we perform the Copy activity inner to each dataset to copy data in every table. Note that SQL query and table name for input and output data set will generate based on the value of Items.

Using this simple pipeline, we can easily integrate a new data source and make changes in our application without spending much. For the ETL solution, the configuration table can be easily updated using simple SQL statements.